Recovery from a Bing de-indexing

This has been quite a journey and, as a professional Technical SEO, quite the challenge.

I have been using Bing Webmaster Tools for many years, mostly as a confirmation tool when comparing issues with Google Search Console. As Bing tends to provide only 5% or less of organic search visits, it hasn't been that important to keep track of what's going on.

Why Bing is becoming more important

However, with the introduction of Large Language Models (LLMs) and the Bing index being used to populate various AI tools, it has become more important that your site is indexed by Bing. Bing is also used by some other search engines to complement their own databases, DuckDuckGo being one of these.

Something's up

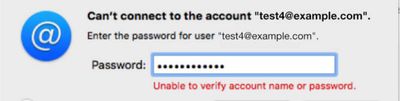

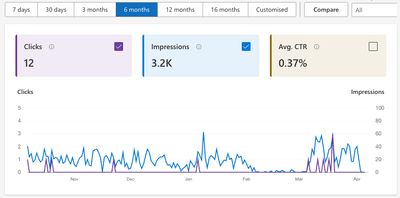

In January 2025 I checked how my personal website was doing and there weren't a great many impressions or clicks - which is fairly normal. I checked the home page using the URL Inspection tool and realised there was a bit of an issue!

It should normally have a couple of green ticks stating that the page is Indexed successfully, URL can appear on Bing and No SEO issue found - that's where we need to be.

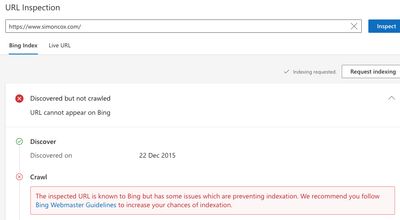

I checked the Live Page tab:

Odd - the URL can be indexed by Bing.

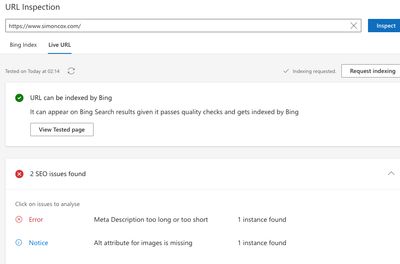

The Crawl and Indexing report

I checked the Crawl and indexing report, which is the last option in the Filter by drop-down menu in Search Performance.

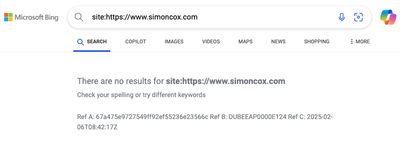

Site check

So I did a site check in Bing Search and, sure enough, there were no results in the Search Engine Results Page (SERP). None of my site's pages were in the index at all - disaster!

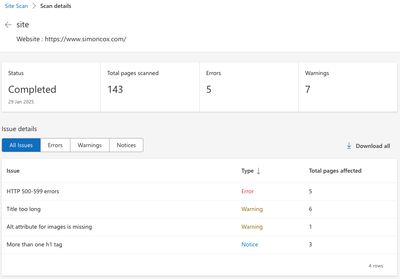

Site scans

Bing Webmaster Tools allows you to run and keep up to 5 separate Site Scans, which are useful for troubleshooting.

Five HTTP 500 errors were concerning.

The information on what actual errors were occurring, and on which URLs, just isn't provided. None of the crawls I ran, using Sitebulb or Screaming Frog, were giving me any errors at all, so I could only put this down to Bing crawler errors at the time of running. Five URLs with server errors would not cause the whole site to be de-indexed though, would they?

Manually adding pages to the index

I began trying to resolve this by manually adding the pages using the URL Inspection and Requesting indexing. This should normally work, and is good practice when you publish a new page.

I did this for 37 pages, left it for a week or so, and then checked to see if they had been indexed. No, they had not.

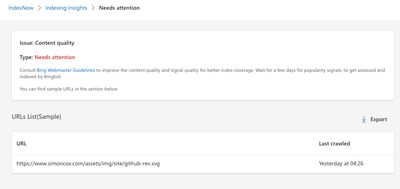

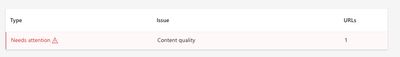

In the IndexNow section in indexing Insights I was able to find some details.

It appeared the issue was Content Quality.

Content quality

Content quality is always a worry though. There isn't any AI written content on the site - you can tell that by my deplorable spelling mistakes.

A while back I published a short, Cobbles of Mousehole, a few days before the start of the decline in the indexed pages. It contained several paragraphs lifted from online news articles and I may have been auto penalised for plagiarism, but if you read the piece it is a review of those articles. So I considered that I might have fallen foul of the Guidelines and replaced the live text with screenshots. I believed that my content was squeaky clean at that point.

Not the first to have been de-indexed

So I had a look around the web and found that a lot of people's websites had been de-indexed by Bing - this wasn't a niche issue that I had. Fortunately there was plenty of advice to hand on how to get your site re-indexed. The one that I found to be most helpful at this stage was Deindexed in Bing?.

To summarise the tips for a quick fix for Bing de-indexed sites:

- Confirm whether you have a Bing indexation problem

- Have a working XML sitemap file

- Check your robots.txt for weird issues

- Make sure your site 404s correctly

- Setup a Bing Webmaster Tools account

- Log a ticket with Bing support

- Check your Uptime + other technical SEO elements

My Results from that checklist:

- Yes I do have an issue - no pages are showing in Bing search.

- XML file works fine. Tested with SEO Testing XML Sitemap Validator.

- Robots.txt validates in Bing webmaster tools.

- Seems to be ok - I got the correct responses in testing

- Already done.

- Not going to do that if I can help it.

- Uptime should be ok - Netlify is a CDN… Speed test all ok.

When checking the 404 page I looked at the response headers and noticed there were three missing headers, but on comparing with some other sites that I knew were not de-indexed there was no difference.
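A check like this is easy to script. The following is a minimal Python sketch, not necessarily how the check above was done: `fetch_status_and_headers` and `missing_headers` are hypothetical helper names, and the URLs in the usage comment are placeholders. It fetches a page that should not exist, confirms the status code, and lists header names that a reference site sends but yours does not.

```python
import urllib.error
import urllib.request


def fetch_status_and_headers(url: str) -> tuple[int, dict[str, str]]:
    """Return (status code, lower-cased response headers) for a URL."""
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            headers = {k.lower(): v for k, v in response.headers.items()}
            return response.status, headers
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx; the error still carries status and headers.
        headers = {k.lower(): v for k, v in error.headers.items()}
        return error.code, headers


def missing_headers(mine: dict[str, str], reference: dict[str, str]) -> list[str]:
    """Header names present on the reference response but absent from mine."""
    return sorted(set(reference) - set(mine))


# Usage (placeholder URLs, requires network access):
# status, mine = fetch_status_and_headers("https://example.com/no-such-page")
# _, reference = fetch_status_and_headers("https://example.org/no-such-page")
# print(status, missing_headers(mine, reference))
```

A real 404 page should report status 404 here. A 200 on a missing URL would be a soft 404, which is worth fixing before looking at anything else.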

Sitemaps

I deleted an old sitemap entry, resubmitted the main sitemap and also added the RSS feed in the Submit Sitemap section.
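Before resubmitting, it can help to confirm the sitemap parses and lists the URLs you expect. This is a small sketch using Python's standard library. The function name `sitemap_urls` is made up for illustration, and it assumes a plain URL sitemap in the standard sitemaps.org namespace rather than a sitemap index.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> value from a URL sitemap.

    Raises xml.etree.ElementTree.ParseError if the XML is not well formed.
    """
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]
```

Stale entries like the one I deleted show up quickly when you compare this list against the pages that actually exist.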

Blocking AI crawlers

At this time I was blocking all known AI crawlers using the ai.robots.txt list. Was this the problem? I knew Bing was testing Copilot with a view to adding it to search (it has since launched), and I did wonder if by blocking AI crawlers I might be running foul of some terms and conditions that I had not found.

I took those out of my robots.txt file, deployed and left it a week. No change.
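One way to rule out robots.txt as the culprit is to test the rules against specific user agents. Here is a rough sketch with Python's built-in `urllib.robotparser`. The helper name `crawl_permissions` and the sample rules are illustrative only, and the parser's matching may not be identical to Bing's own.

```python
from urllib.robotparser import RobotFileParser


def crawl_permissions(robots_txt: str, agents: list[str], url: str) -> dict[str, bool]:
    """Report, per user agent, whether the given robots.txt allows fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Illustrative rules: block one AI crawler, allow everyone else.
SAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

print(crawl_permissions(SAMPLE_ROBOTS, ["bingbot", "GPTBot"], "https://example.com/"))
```

If `bingbot` comes back as allowed while the AI crawlers are blocked, the block list is unlikely to be what is keeping Bing out.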

At that point I thought nearly everything else had been ruled out. So it was time to raise a ticket with Bing.

Bing Help ticket

So I did raise a ticket with Webmasters Support, explained what I had done and waited for their response.

Bing support replied

"After further review, it appears that your site https://www.simoncox.com/ did not meet the standards set by Bing the last time it was crawled. Testing: https://www.simoncox.com/post/2024-05-28-log-store-build/ Bing URL Inspection states: The inspected URL is known to Bing but has some issues which are preventing indexation. We recommend you follow Bing Webmaster Guidelines to increase your chances of indexation."

I wasn't sure that their response was that helpful.

More changes and a switch in hosting

I made some more subtle changes to the site and ran the Bing webmaster site scan several times to eliminate all issues. I was able to identify a 500 error while doing this so ended up switching the site from Netlify to Cloudflare hosting. This didn't take too long and I now have the site deploying to both so with a switch of the DNS, managed through hover.com, I can flick between them if needed.

After the hosting migration the Bing Site scan gave me no errors.

Redirect issues with Cloudflare

Having moved to Cloudflare I checked a few things and some redirects were not working. What is good is that the _redirects file works on both Netlify and Cloudflare, even the splats. Cloudflare state that you can have 2000 static and 100 dynamic redirects on the free plan - but anything after about 100 static didn’t work for me. So I had to work them into splats to get below the threshold.
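To see how close a _redirects file is to those limits, you can count the rules. The sketch below is an assumption-laden helper, not a Cloudflare tool: `count_redirect_rules` is a made-up name, and it treats any rule whose source path contains a splat (`*`) or a `:placeholder` segment as dynamic and everything else as static.

```python
def count_redirect_rules(redirects_text: str) -> tuple[int, int]:
    """Return (static, dynamic) rule counts for the text of a _redirects file."""
    static = dynamic = 0
    for raw_line in redirects_text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        source = line.split()[0]
        # Assumption: splats and :placeholders in the source make a rule dynamic.
        if "*" in source or "/:" in source:
            dynamic += 1
        else:
            static += 1
    return static, dynamic


SAMPLE_REDIRECTS = """\
# old posts
/old-page /new-page 301
/about-me /about 301
/blog/* /post/:splat 301
"""

print(count_redirect_rules(SAMPLE_REDIRECTS))  # (2, 1)
```

Folding a group of static rules into one splat rule, as in the last sample line, is what brings the static count down.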

With all that done I waited a week and again raised a support request. This time I got a really quick reply:

“After further review, it appears that your site https://www.simoncox.com/ did not meet the standards set by Bing the last time it was crawled.” ...

IndexNow

One feature that Bing has is IndexNow, which allows a webmaster to get pages indexed almost instantaneously and shared with other search engines using the IndexNow system. Delightfully, Cloudflare can do this for you automatically with their Crawler Hints - just switch it on.

Having moved the site to Cloudflare I can make use of their IndexNow feature! I had to shift the DNS to Cloudflare as well so that I could turn on Crawler Hints to get the IndexNow feature. Then I had to turn on Custom domain in Cloudflare Pages and reactivate the site, as I think it got turned off when I switched the DNS.

In no time at all I had 839 URLs submitted to the index.
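Cloudflare did the submitting for me, but if your host does not offer this you can call IndexNow yourself. The sketch below only builds the request, following the published IndexNow protocol (a JSON POST with `host`, `key`, `keyLocation` and `urlList`). The function name, the key value and the key file location are placeholders, and you must host the key file on your own site for the submission to be accepted.

```python
import json
import urllib.request

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(host: str, key: str, urls: list[str]) -> urllib.request.Request:
    """Build (but do not send) an IndexNow bulk submission request."""
    payload = {
        "host": host,
        "key": key,
        # Assumption: the key file is served from the site root as <key>.txt.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


# Usage (placeholder key, requires network access):
# request = build_indexnow_request("example.com", "your-indexnow-key",
#                                  ["https://example.com/new-post/"])
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(response.status)
```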

What happened next was a surprise! There was a massive rise in errors - of course there would be as the pages were getting indexed but this got me thinking.

HTML validation

I checked the site on the W3C Nu HTML checker tool and found I had the Fathom Analytics script inserted after the closing body tag and before the closing html tag. Years and years ago this was ok. Google thinks it's ok - well it wasn't de-indexing the site so I presume it forgave me and got on with the job in hand.

Apparently that was a change in HTML 4.01 (1999) and I had forgotten. I looked back through my deployments and I had switched the Fathom script from the head to the base of the html just before the site was de-indexed. Coincidence? Had I found the cause?

I moved the Fathom code inside the body tag, ensured the html validated and then resubmitted all the page urls using the URL submission tool.
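This particular mistake is simple to test for across a whole site. The following is a minimal sketch using Python's `html.parser`, with made-up names (`AfterBodyFinder`, `tags_after_body`). It only flags elements that open after the closing body tag and is no substitute for the W3C checker.

```python
from html.parser import HTMLParser


class AfterBodyFinder(HTMLParser):
    """Collect the names of any elements opened after </body>."""

    def __init__(self) -> None:
        super().__init__()
        self.body_closed = False
        self.stray_tags: list[str] = []

    def handle_starttag(self, tag, attrs):
        if self.body_closed:
            self.stray_tags.append(tag)

    def handle_endtag(self, tag):
        if tag == "body":
            self.body_closed = True


def tags_after_body(html: str) -> list[str]:
    """Return the tags that appear after the closing body tag, in order."""
    finder = AfterBodyFinder()
    finder.feed(html)
    finder.close()
    return finder.stray_tags


broken = '<html><head></head><body><p>Hi</p></body><script src="a.js"></script></html>'
print(tags_after_body(broken))  # ['script']
```

An empty list means nothing is sitting between the closing body and html tags.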

Finally success and pages started to get indexed

I kept a check on progress and suddenly the number of pages crawled started going up daily.

The site started appearing in the SERPs again!

Here we go, good content for people to read and enjoy back in the SERPs where they belong.

Interesting differences between the layouts depending on your search terms!

Conclusion

It is a bit of an odd one this. For years we have been bowing our heads to Google and its ways. Google have constantly stated that they really do not care if your web page is valid or not. (Though it really does matter if you break the head with a body tag.) This is probably for the best as there are many web pages out there that don't validate but still provide the user with a good experience. Bing however is a little more fussy and I applaud it for that. I like pages to be written with valid html as it helps other developers sort your code out when they need to and for people learning html to understand how it works.

I let my standards slip though and suffered for it (well 5% less people visiting wasn't really suffering but my fragile ego was complaining).

And I was done. Or at least I thought I was...

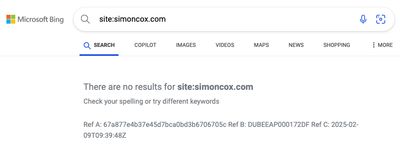

The aftermath of the Bing de-indexing

When I started writing this article a search on Bing with the site operator for my site was providing a full list of the pages. As of today there is nothing showing again - yet the webmaster tools is showing impressions and clicks as well as the pages being in the index. Google has some of my content ranking really well but since the initial euphoria of the pages appearing in the Bing SERP again I think the new site honeymoon period may be over as nothing is cutting the mustard for Bing.

Again I think I know what may have done it this time and have made a change and booked a test in for next week. I will update this article if I have fixed it again.

I am Simon Cox. I really am.

In looking at the SERPs today I thought that the new Copilot might shed some light on what's indexed on the site. I asked it to tell me about simoncox.com and it clearly mentioned Technical SEO but linked to a different Simon Cox - who happens to be a Microsoft Regional Director... Am I being blacklisted now? A conspiracy tin foil hat has been ordered. Back to the keyboard.
